For any online newsroom, A/B testing editorial articles should be high on the agenda. It’s low-hanging fruit: a small investment of time which will consistently deliver long-term gains in website performance.

Why newsrooms should A/B test all headlines

Sept. 16, 2025 by Stefan ten Teije

What does A/B testing headlines in a newsroom mean?

E-commerce companies test virtually every button and word on their websites to discover what persuades customers to buy. Large data-driven newsrooms do much the same, focusing instead on article click-through rates from the homepage. That metric reveals how attractive a story appears to readers.

The advantage is simple: the headline that performs best can also be used across newsletters or other channels. You’ve already got the evidence that it works with your audience.

Are there drawbacks to A/B testing headlines?

There are hardly any. The only requirement is that the alternative headline must be faithful to the news article and deliver on the promise it makes. Seasoned editors usually have a preference for one headline over another (and there are good reasons not to test too many at once).

The small trade-off is that during the ten or twenty minutes the test runs (depending on the setup), half your audience will see the headline you consider less strong. But this inconvenience is outweighed by the potential benefit.

How smartocto’s A/B testing feature works

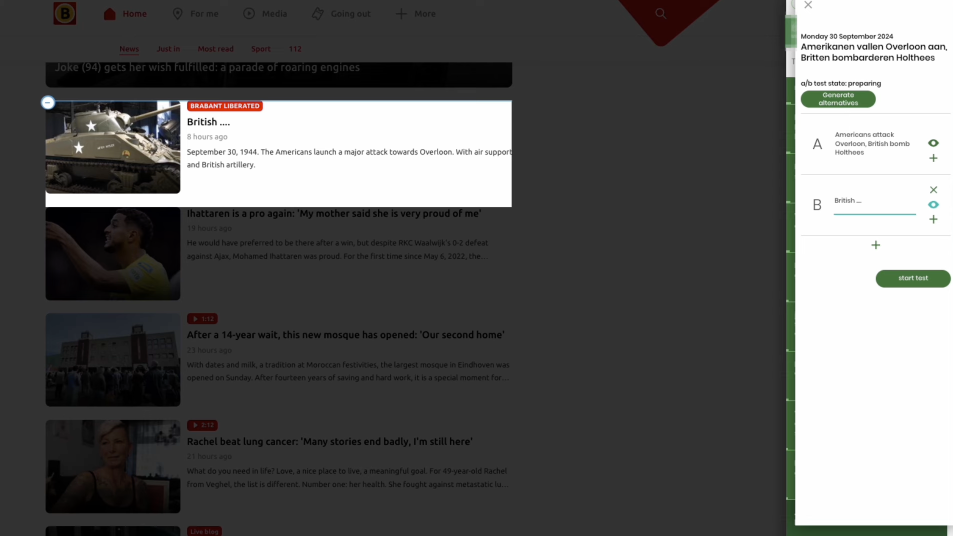

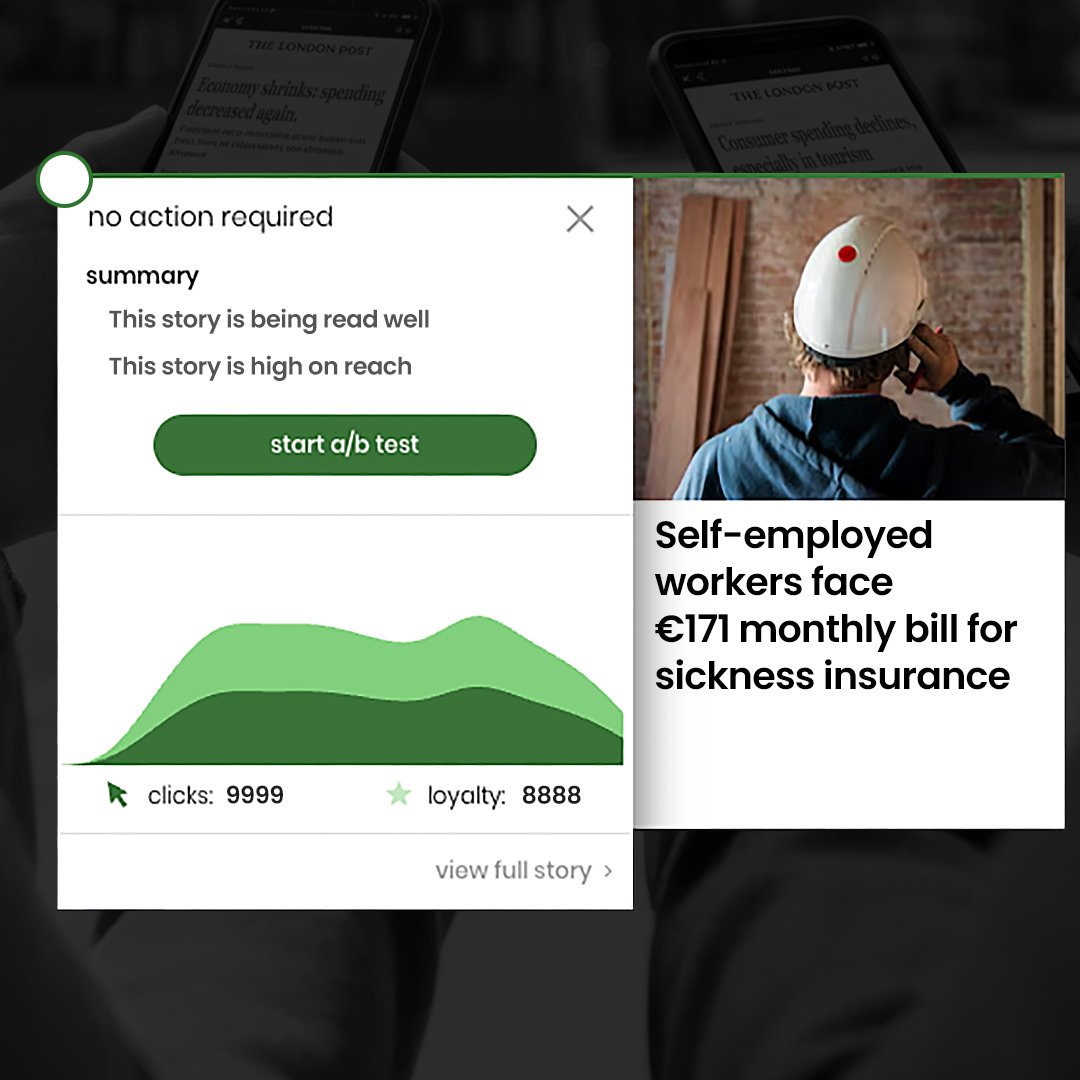

Smartocto’s Tentacles feature functions as a layer over your pages. It frames news articles into a report and adds coloured dots to the story to signal whether a story is performing well, moderately or poorly in its current position. By clicking the dot, you can see how often the story has been clicked in the last 30 minutes, and how many readers stayed longer than ten seconds (a “loyalty click”).

A short data analysis explains the behaviour: for example, that the piece is being read in depth and is drawing substantial traffic to the news website.
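To make the two numbers behind each dot concrete, here is a minimal sketch of how clicks in the last 30 minutes and "loyalty clicks" could be counted. It is not smartocto's implementation: the `Click` record, the `summarise_clicks` function and its parameter names are hypothetical, and only the 30-minute window and the ten-second threshold come from the description above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Click:
    """Hypothetical record of one homepage click on a story."""
    clicked_at: datetime
    seconds_on_page: float


def summarise_clicks(clicks, now, window_minutes=30, loyalty_seconds=10):
    """Count recent clicks and how many of them were loyalty clicks."""
    cutoff = now - timedelta(minutes=window_minutes)
    # Only clicks inside the reporting window count.
    recent = [c for c in clicks if cutoff <= c.clicked_at <= now]
    # A loyalty click is a reader who stayed longer than the threshold.
    loyal = [c for c in recent if c.seconds_on_page > loyalty_seconds]
    share = len(loyal) / len(recent) if recent else 0.0
    return {
        "clicks": len(recent),
        "loyalty_clicks": len(loyal),
        "loyalty_share": share,
    }
```

The loyalty share is the useful part: a story with many clicks but a low share is attracting readers without holding them.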

Once you have this, you can launch an A/B test. For a brief period, sometimes only a few minutes, smartocto will show the different headline variants to different audience segments. A visitor opening the website may see something different from their neighbour opening the app.

-> If and when a clear winner emerges, the system automatically presents that headline to all new visitors.

-> If no winner can be identified within the set timeframe (say, 20 minutes), the system either advises no action or suggests running another test.
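The article does not say how a "clear winner" is determined. One common way to make that call is a two-proportion z-test on click-through rates, sketched below. The function name `pick_winner`, its parameters and the 1.96 threshold (roughly 95% confidence) are assumptions for illustration, not smartocto's actual rule.

```python
import math


def pick_winner(clicks_a, views_a, clicks_b, views_b, z_threshold=1.96):
    """Return "A", "B", or None when neither headline clearly wins.

    Assumed approach: a two-proportion z-test on click-through rate,
    evaluated once at the end of the test window.
    """
    if views_a == 0 or views_b == 0:
        return None  # not enough traffic to compare
    ctr_a = clicks_a / views_a
    ctr_b = clicks_b / views_b
    # Pooled click-through rate under the "no difference" hypothesis.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return None  # no clicks at all, or every view was a click
    z = (ctr_b - ctr_a) / std_err
    if z >= z_threshold:
        return "B"
    if z <= -z_threshold:
        return "A"
    return None  # no clear winner: take no action or run another test
```

For example, `pick_winner(120, 2000, 170, 2000)` returns `"B"`, while `pick_winner(100, 2000, 105, 2000)` returns `None`, because a gap that small could easily be chance.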

How smartocto can help you A/B test headlines, intros and images

When we think of A/B tests, it’s often headlines that we’re focused on. That’s right and proper: headlines act as a kind of triage for readers, helping them decide which news articles they want to read more of, and which ones they don’t.

If you’re struggling to come up with alternative headlines, the AI part of the feature can suggest some for you.

So, although most clients focus on headlines, you can harness the power of A/B testing in other ways too:

- If the article’s introduction is visible on the homepage, you can test that.

- Images, often as important as headlines in setting the tone, can be tested as well. News article headlines and images can also be paired more deliberately to ensure harmony between text and visuals.

However you utilise this feature, subtle differences can make a surprising impact.

What impact does A/B testing headlines have on newsroom performance?

This is where the data really helps to make the argument in favour of A/B testing. In the research smartocto undertook into audience behaviour, we found that winning headlines generate on average 19.3% more clicks and perform slightly better on visitor loyalty. For most newsrooms, in this context loyalty means the proportion of readers still engaged with the article after ten seconds.

This helps avoid clickbait. Headlines should attract readers to consume the article, not trick them into clicking for a shallow payoff.

Sometimes the original headline remains the audience’s favourite — unsurprising, given it would have been used without a test. In roughly a third of cases, there is no winner at all. For accuracy, we count those cases as “0% uplift”, which lowers the overall average.
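A small worked example shows why counting no-winner tests as 0% pulls the average down. The six results below are made up purely for illustration (they are not smartocto data), and the helper `average_uplift` is a hypothetical name.

```python
def average_uplift(uplifts_pct):
    """Mean uplift in percent; returns 0.0 for an empty list."""
    return sum(uplifts_pct) / len(uplifts_pct) if uplifts_pct else 0.0


# Illustrative, made-up results for six tests; None marks "no winner".
results = [30.0, None, 24.0, None, 42.0, 18.0]

# Counting no-winner tests as 0% uplift, as described above.
with_zeros = [u if u is not None else 0.0 for u in results]

# Alternative for comparison: ignore tests that had no winner.
winners_only = [u for u in results if u is not None]

print(average_uplift(with_zeros))    # average over all six tests
print(average_uplift(winners_only))  # average over the four winners only
```

With these numbers, the all-tests average is 19.0% while the winners-only average is 28.5%. Including the zeros gives the more conservative figure, since it reflects what a newsroom can expect across every test it runs.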

Beyond clicks: other measurable effects of A/B testing

Click-throughs from the homepage are not everything. The bigger (and better) question is whether such tests improve the overall performance of a news site. Evidence suggests they do.

The logic is straightforward. Running tests on (almost) every headline validates whether readers understand the message and find it compelling. Imagine a shopping street where every shop window is a headline. Change the mannequins’ outfits, and more customers may step inside. If every shop tests systematically, the street as a whole becomes livelier and more inviting. People will visit more shops (articles) and perhaps stay longer in those shops to find the clothes they saw in the window, along with other things they like…

The benefits extend further. A winning headline can be reused on social media and will often appear in search results or Google Discover, already validated by your readers.

Back to the evidence: in an average newsroom (testing 20% of homepage headlines and experimenting with notifications on 30% of stories), smartocto data show uplifts of around 16% in pageviews over time and 39% in subscriptions. For smaller publishers taking their first steps in data-informed journalism, the gains can be even greater.

Three tips for effective A/B testing

Tip 1: Limit yourself to one extra headline

When reviewing your news article for spelling or flow, draft one alternative headline. Resist the temptation to create more than three versions: the system becomes cluttered, and the winner less clear.

Tip 2: Involve a colleague

Fresh eyes bring fresh ideas. Another editor does not need to supply a perfectly formulated headline; a new angle or phrasing can be enough to spark a stronger option.

Tip 3: Experiment with a single word

If you are short of alternatives, adjust just one word. One client swapped “expert” for “analyst” in a headline – a small shift that produced far better results. Sometimes nuance makes the difference.

And there are more tips…

Is your newsroom harnessing the power of A/B testing?

A/B tests on their own don’t make a newsroom successful, but successful newsrooms generally run A/B tests. The secret is that they do it as part of a broader strategy.

See how A/B tests could help your particular newsroom with your particular goals today.