Since October last year, smartocto users have had the option to test images, and many clients have started using the feature. The question is: what is the effect?

To work out whether and why image testing is worth your time, a spot of data analysis is required.

The test

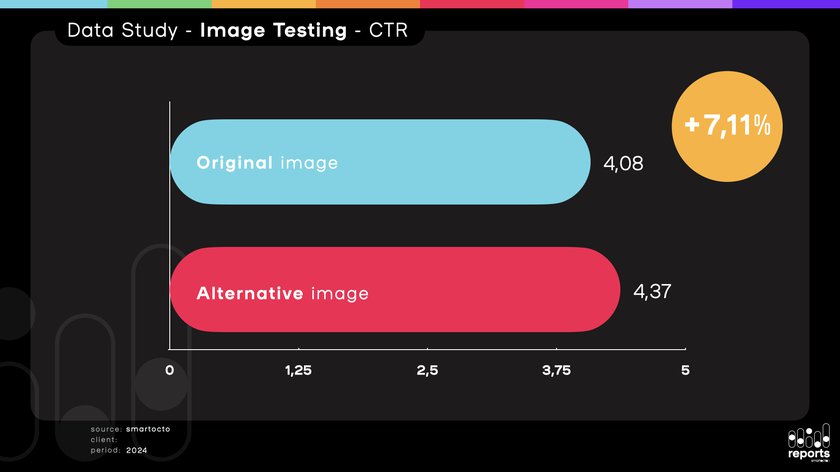

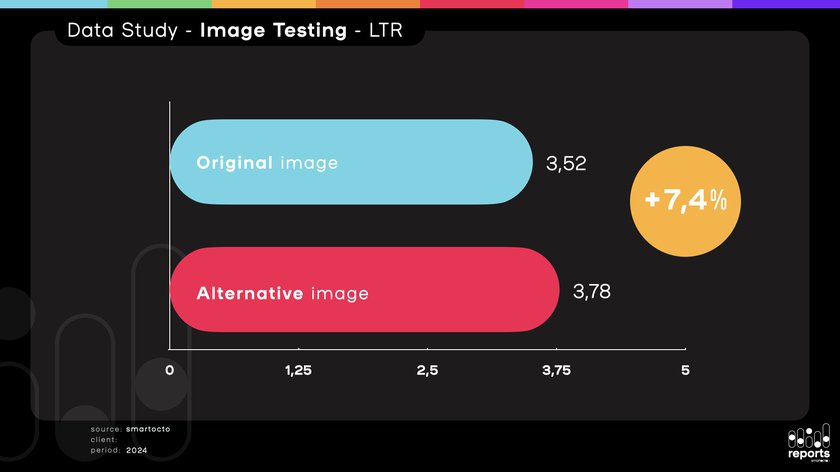

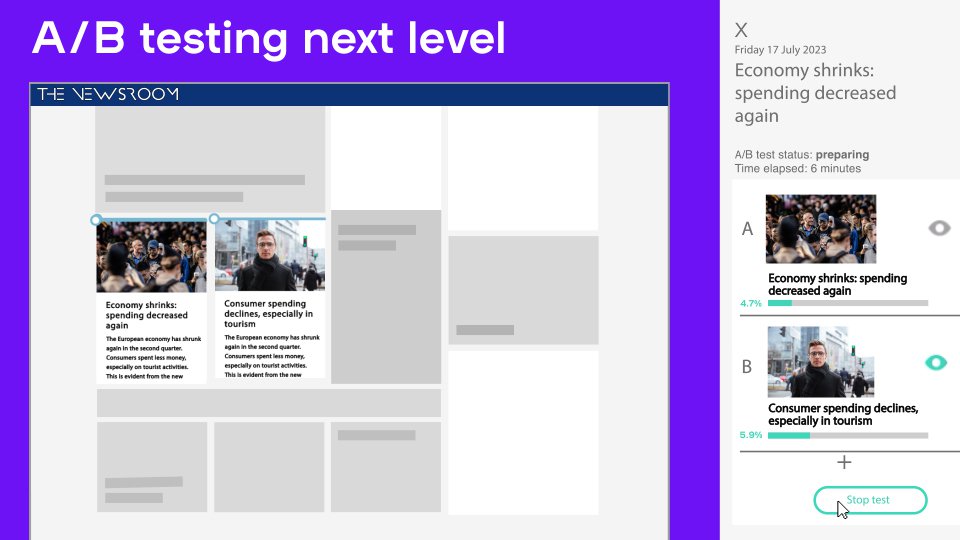

Image testing works through A/B tests: one part of the audience sees Image A, and another part sees Image B. Within a short period (perhaps as brief as 10 minutes, depending on the number of visitors), our feature Tentacles calculates which version of the article is clicked more frequently and whether those visitors stay on the page for at least 10 seconds (loyalty clicks).
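To make the idea of a "clear winner" concrete, here is a minimal sketch in Python. It is not how Tentacles is implemented; the `Variant` class, the `pick_winner` function, the 5% significance threshold, and the choice of a pooled two-proportion z-test are all assumptions made for illustration. It compares loyalty-click rates between two images and keeps the original unless the alternative is clearly better.

```python
from dataclasses import dataclass
from math import erf, sqrt


@dataclass
class Variant:
    """Hypothetical container for one image's test results."""
    name: str
    impressions: int      # visitors who were shown this image
    loyalty_clicks: int   # clicks followed by at least 10 seconds on the page

    @property
    def rate(self) -> float:
        return self.loyalty_clicks / self.impressions if self.impressions else 0.0


def two_proportion_p_value(a: Variant, b: Variant) -> float:
    """Two-sided p-value of a pooled two-proportion z-test (assumed method)."""
    if a.impressions == 0 or b.impressions == 0:
        return 1.0
    pooled = (a.loyalty_clicks + b.loyalty_clicks) / (a.impressions + b.impressions)
    se = sqrt(pooled * (1 - pooled) * (1 / a.impressions + 1 / b.impressions))
    if se == 0:
        return 1.0
    z = (a.rate - b.rate) / se
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - normal_cdf)


def pick_winner(original: Variant, alternative: Variant, alpha: float = 0.05) -> Variant:
    """Return the alternative only when it is clearly better; otherwise keep the original."""
    p_value = two_proportion_p_value(original, alternative)
    if p_value < alpha and alternative.rate > original.rate:
        return alternative
    return original


# Made-up numbers, purely to show the call
image_a = Variant("A", impressions=1000, loyalty_clicks=50)
image_b = Variant("B", impressions=1000, loyalty_clicks=90)
print(pick_winner(image_a, image_b).name)
```

With small differences or little traffic the p-value stays above the threshold, so the function falls back to the original image, which mirrors the rule that the original remains when no clear winner emerges.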

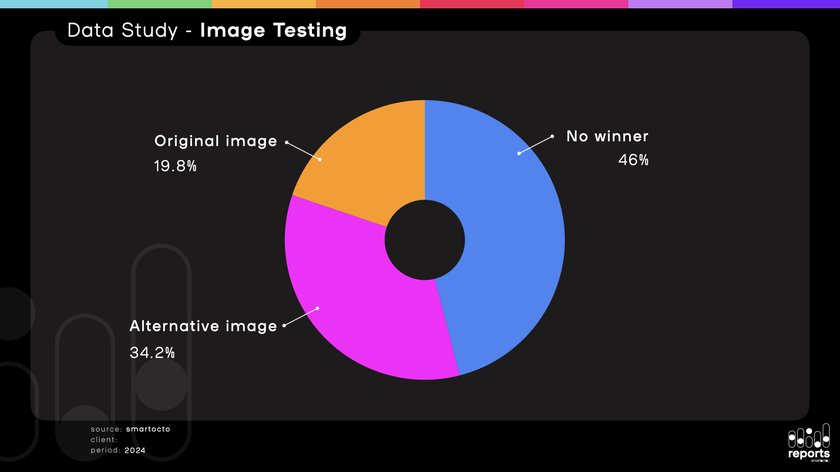

- We analysed 3,260 tests across 26 brands

- In more than half of the tests, there was a clear winner

- When no clear winner emerged, the original image remained

- When a winner did emerge, it was usually the alternative image (B, C, D, or E) that won

- In most cases, only two images were tested