Nearly every major news website relies on A/B testing for its headlines, yet editors often struggle to come up with additional headlines for their articles. So how can AI help with headline testing?

What follows is the result of a collaboration between an editorial analytics company (smartocto) and a Dutch regional broadcaster (Omroep Brabant) to see whether ChatGPT can generate compelling alternative headlines, and whether this can be integrated into the workflow of a busy news site.

Results sneak peek:

- ChatGPT often comes up with winning headlines

- There were practical challenges in using this approach

- Ultimately the final decision needs to rest with the editor

The study: compare the original with the winner

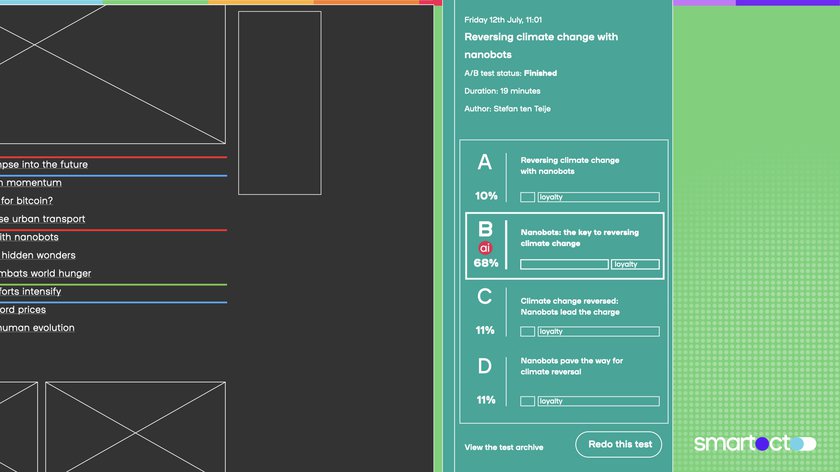

First, a baseline. To establish one, smartocto analysed nearly 9,000 articles published during June 2023 by 57 news sites around the world, all of which had undergone conventional, editor-led A/B headline testing.

The best metric to measure impact is the Click Through Rate (CTR), representing the percentage of website visitors who clicked on the headline.

(The original headline can also be the winning headline: we assume the original would have been the published headline if there had been no test. When calculating ‘winners’, the percentage of ‘loyalty clicks’ was also taken into account, that is, the percentage of visitors who stayed on the page for 15 seconds or more, which helps to identify clickbait.)
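To make the two metrics concrete, here is a minimal Python sketch of how CTR and loyalty clicks could be computed for each headline variant. The article does not say how smartocto combines the two when picking a winner, so the `loyal_ctr` score (loyal clicks per 100 impressions) and the `pick_winner` helper are assumptions made for illustration, as are the class and field names and the sample numbers. The sketch also assumes the loyalty percentage is measured among visitors who clicked.

```python
from __future__ import annotations

from dataclasses import dataclass

LOYALTY_SECONDS = 15  # threshold named in the article


@dataclass
class Variant:
    """One headline in an A/B test (hypothetical structure)."""
    headline: str
    impressions: int            # visitors who were shown the headline
    dwell_seconds: list[float]  # time on page, one entry per click

    @property
    def clicks(self) -> int:
        return len(self.dwell_seconds)

    @property
    def loyal_clicks(self) -> int:
        # Clicks where the visitor stayed 15 seconds or more.
        return sum(1 for s in self.dwell_seconds if s >= LOYALTY_SECONDS)

    @property
    def ctr(self) -> float:
        # Click-through rate as a percentage of impressions.
        return 100 * self.clicks / self.impressions if self.impressions else 0.0

    @property
    def loyalty_rate(self) -> float:
        # Percentage of clicks that were loyalty clicks.
        return 100 * self.loyal_clicks / self.clicks if self.clicks else 0.0

    @property
    def loyal_ctr(self) -> float:
        # ASSUMPTION: one possible combined score, not smartocto's formula.
        return 100 * self.loyal_clicks / self.impressions if self.impressions else 0.0


def pick_winner(variants: list[Variant]) -> Variant:
    """Return the variant with the highest loyalty-adjusted CTR (assumed rule)."""
    return max(variants, key=lambda v: v.loyal_ctr)


# Made-up sample data: the second headline gets more clicks,
# but most of those visitors leave within a few seconds.
original = Variant("Original headline", 1000, [20, 30, 5, 40])
clickbait = Variant("Clickbait headline", 1000, [2, 3, 4, 5, 6, 20])

winner = pick_winner([original, clickbait])
print(winner.headline, f"CTR={winner.ctr:.2f}%", f"loyalty={winner.loyalty_rate:.0f}%")
```

In this made-up example the clickbait variant has the higher raw CTR, but the original wins once short visits are discounted, which is the effect the loyalty-click check is meant to capture.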

Smartocto and Omroep Brabant are hosting a free webinar on A/B testing, where they will discuss these findings further and share tips for implementing this strategy successfully.