Hi there,

This might be a bit philosophical or nostalgic for a Friday afternoon, but bear with me... A fever dream I had recently, about algorithms and artificial intelligence, left me with a new insight.

As a devoted (and slightly addicted) TikTok user, I think I have a fair grasp of how the algorithm works. From time to time, TikTok tests me with a new topic. If I linger for a few seconds (an eternity on the platform), the very next video is on the same subject. “You liked this, didn’t you? Well, here’s more of it!”

It works. Before I even realise it, I’ve been distracted for a quarter of an hour, or half an hour, or even longer.

But cracks appear. Sometimes I’ll see (for the third time) the same Oasis song from the same concert and I’ll pause over it. The algorithm doesn’t think, of course. And yet it behaves as if it does: “You’re definitely (maybe) an Oasis fan, here’s some more.”

But maybe that conclusion isn’t the right one. I may have paused because I noticed something unusual in the footage. By accident, I gave the machine the wrong signal – and in return, it pulled me further in, based on this false assumption.

AI is never more obviously artificial than when it misinterprets the nuanced, human signals we send. And it’s not just a TikTok problem.

Spotify lost its spark

For years, Spotify’s algorithm delighted me with its ‘Radio’ function. Pick a song, and you’d get a playlist full of new discoveries channelling the same mood. Recently, though, something’s changed. Now the playlists recycle songs I already know – and often play.

The logic is clear: most users don’t stray too far from their tastes. They want the comfort of the familiar. Spotify doesn’t want to risk losing them, so it plays it safe. But that makes the experience dull.

And then there’s ChatGPT. When I asked GPT-5 to help me translate a phrase this week, I hit a similar wall. I wanted to play with the witty aside “checks notes” – maybe twist it into something fresher, like “(don’t need to check notes for this one)”. I kept asking for alternatives. The verdict, every time? The “best” option is… “checks notes”. That’s because language models aren’t designed to reward originality: they are trained to reproduce what is most probable, most common.

Here’s the insight:

Algorithms and AI can certainly work for you. But if you outsource creativity to them, you risk ending up in the realm of the majority. And if you want to break free from that, you’ll have to work at it.

Let’s talk about Oracle

This week our CEO, Erik van Heeswijk, joined a WAN-IFRA webinar, where he announced a new AI feature that smartocto will begin testing later this autumn. Its project name: Oracle.

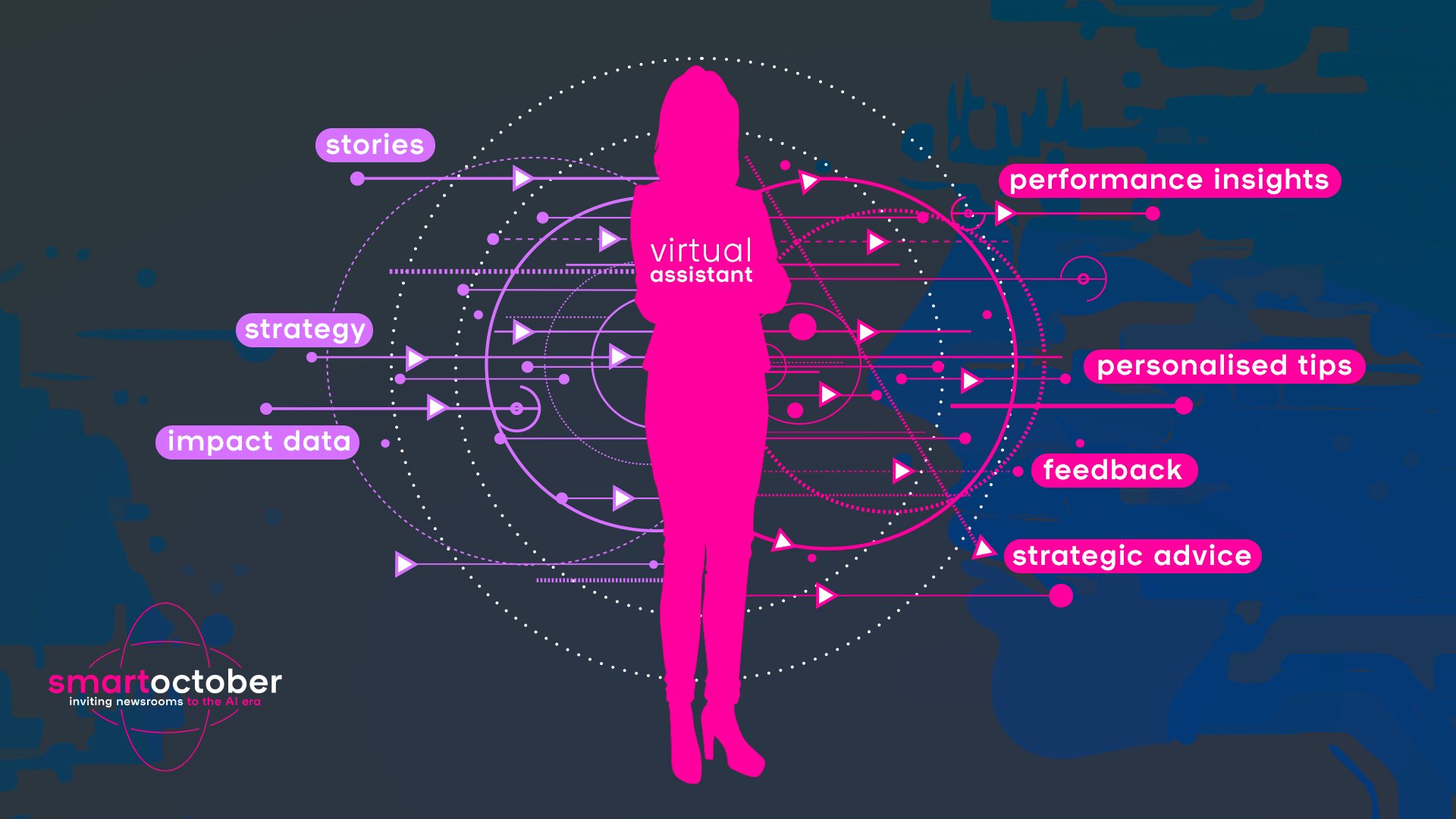

So yes, we’re also going full throttle on AI, but we’re confident it can genuinely help our clients. Above, I described what you don’t want from such a system: cookies that all taste the same. The real challenge is to deliver the opposite: advice that is tailor-made and goes beyond real-time, tactical, algorithmic, in-the-moment optimisation. That’s why we’ve designed Oracle to be strategic, personalised, analysis-based and surprising.

That’s the promise. You ask questions about your content strategy, and the system provides sharp, intelligent answers grounded in your own data and, crucially, your own ambitions.

The latter matters most. We can analyse your data (if you are a client, of course), but we also want to understand your goals. That way, the specially trained large language model running in the background can explore angles specific to you, designed to meet your audience’s unique mix of needs.

Two years ago, during ‘smartoctober’, we announced our ambition to build a ‘virtual assistant’. Since then, smartocto.ai has been helping newsrooms with follow-up story suggestions, automatic tagging and analysis of articles, and even text-improvement tips.

This year’s smartoctober will be the moment to share more about Oracle. Until then, if your curiosity is already piqued, it’s worth revisiting the blog below, or get in touch if you’d like to test Oracle yourself.